In this week’s lab meeting, we discussed the paper Neural Network Poisson Models for Behavioural and Neural Spike Train Data, presented by Khajehnejad, Habibollahi, Nock, Arabzadeh, Dayan, and Dezfouli at ICML 2022. The work aims to introduce an end-to-end model that explains how the brain represents past and present sensory inputs across areas, and how these representations evolve over time and ultimately lead to behavior. Numerous supervised, reinforcement learning, and unsupervised point process-based methods exist for examining the relationship between neural activity and behavior, but they generally share several shortcomings: they are insufficient to capture complex neural representations of inputs and actions distributed across different brain regions, they do not account for trial-to-trial variability in behavior and neural recordings, and they are sensitive to the choice of bin size for spike counts.

To address these limitations, the authors introduced a novel neural network Poisson process model of a canonical visual discrimination experiment in which, on each trial, subjects are presented with a stimulus and have to choose an option (or keep still, i.e., NoGo). The main contributions of this approach can be summarized as follows.

- Handles variability between response times across different trials of an experiment by a temporal re-scaling mechanism:

- For each trial $n$, they considered spikes from unit $r$ until either a response $a_n$ was made at time $t_n$ or the end of the time window $T$ was reached, whichever came first, i.e., up to $\min(t_n, T)$. The trial duration was restricted in this way to model the neural processes that led to behavioral responses, rather than what happened post-response. To handle the variability of this cutoff across trials, they proposed to re-scale the original spike times using a trial-specific monotonic function $f_n$. Following this transformation, the latent neural intensity function of the inhomogeneous Poisson point process of unit $r$ at trial $n$ and time $t$, $\lambda_{r,n}(t; z_n)$, where $z_n$ is the stimulus embedding, can be related through $f_n$ to the corresponding canonical (trial-independent) intensity function $\lambda_r$. This makes it possible to estimate a single function $\lambda_r$ that models neuronal spiking activity across all trials while preserving the variability of response times across trials. To simplify the subsequent derivations, they assumed this transformation to be linear.

- Flexibly learns (without any assumptions on the functional form) the connections between environmental stimuli and neural representations, and between neural representations and behavioral responses:

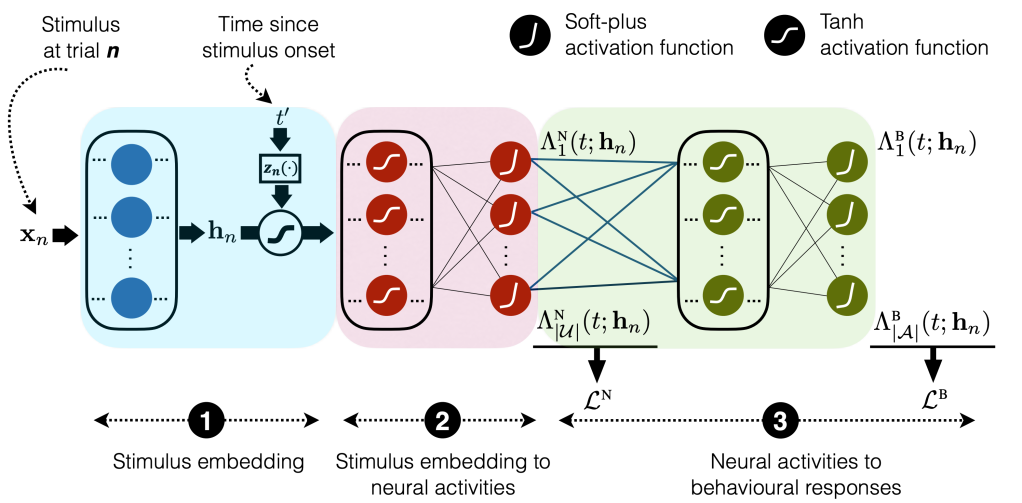

- The figure above shows the model they proposed for this task, a neural network with three main components. The first maps the stimulus $s_n$ presented at trial $n$ through a series of fully connected layers to an input embedding denoted by $z_n$. The second component takes the embedding $z_n$ and the spike times $t$ since the stimulus onset and outputs the modeled activity of each neural region $r$ at time $t$ in the form of the cumulative intensity function $\Lambda_r(t; z_n)$. The neural intensity function $\lambda_r(t; z_n)$ is then obtained by differentiating $\Lambda_r(t; z_n)$ with respect to $t$. The motivation for parameterizing the cumulative intensity function, rather than the intensity function directly, was to avoid computing the intractable integral in the point process likelihood. The third component of the model takes the neural cumulative intensity functions and maps them to the behavioral cumulative intensity functions $\Lambda^{\mathrm{beh}}_a(t)$ for making each action $a$ at each time $t$ since the stimulus onset.

- Jointly fits both behavioral and neural data:

- They used a neural loss function $\mathcal{L}_{\mathrm{neural}}$ to train all the weights from stimulus to neural cumulative intensity functions (blue and red rectangles in the figure above). This loss is evaluated at the spike times $t_{n,r}^{i}$, where $t_{n,r}^{i}$ is the time, relative to the stimulus onset, of the $i$-th spiking event of unit $r$ at trial $n$. Given these trained neural cumulative intensity functions, the weights connecting neural outputs to behavioral outputs (green rectangle in the figure above) were then trained using a behavioral loss function $\mathcal{L}_{\mathrm{beh}}$, which is defined over the sets of trials on which each action $a$ was taken before the end of the time window $T$.

- Derives spike count statistics disentangled from chosen temporal bin sizes:

- Since the aforementioned learning process directly uses the spike times as inputs instead of spike counts, this inference is independent of the selection of a time bin for spike count calculations.
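The re-scaling and likelihood ideas in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the toy closed-form `cum_intensity` (a stand-in for the network output), and the specific linear map `t / cutoff` are all hypothetical choices made here. It shows how, once the cumulative intensity is available, the integral term of the inhomogeneous Poisson likelihood reduces to a single function evaluation.

```python
import math

def rescale(spike_times, response_time, window):
    """Keep spikes up to min(response_time, window) and map them linearly
    onto [0, 1]. The slope 1/cutoff is an assumption of this sketch."""
    cutoff = min(response_time, window)
    kept = [t for t in spike_times if t <= cutoff]
    return [t / cutoff for t in kept], cutoff

def cum_intensity(tau, rate=5.0, ramp=3.0):
    """Hypothetical canonical cumulative intensity (stand-in for the network):
    monotone increasing on [0, 1] with cum_intensity(0) == 0."""
    return rate * tau + ramp * tau ** 2

def intensity(tau, eps=1e-6):
    """Intensity as the derivative of the cumulative intensity.
    A central finite difference is used here; a network would use autodiff."""
    return (cum_intensity(tau + eps) - cum_intensity(tau - eps)) / (2 * eps)

def trial_nll(spike_times, response_time, window):
    """Negative log-likelihood of one trial under an inhomogeneous Poisson
    process. The integral of the intensity over the trial is cum_intensity(1.0),
    so no numerical integration is needed."""
    taus, cutoff = rescale(spike_times, response_time, window)
    # Change of variables: trial-time intensity = canonical intensity * d(tau)/dt,
    # and d(tau)/dt = 1 / cutoff for the linear map assumed above.
    log_terms = sum(math.log(intensity(tau) / cutoff) for tau in taus)
    return -(log_terms - cum_intensity(1.0))

# Example trial: response at 0.5 s inside a 1.0 s window, so the spike at 0.9 s is dropped.
nll = trial_nll([0.1, 0.4, 0.9], response_time=0.5, window=1.0)
```

Because every trial is mapped onto the same unit interval, a single `cum_intensity` is shared across trials, and the trial-specific cutoff enters only through the `1 / cutoff` factor.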

Finally, they applied this method to two neural/behavioral datasets from visual discrimination tasks: one recorded from mice using Neuropixels probes (Steinmetz et al., 2019), and the other generated by a hierarchical network model with reciprocally connected sensory and integration circuits that modeled behavior in a motion-based task (Wimmer et al., 2015). They showed that the method can link behavioral data with the underlying neural processes and input stimuli in both cases, and that it outperforms several existing baseline point process estimators.
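As a small illustration of the bin-size point made earlier, the sketch below uses the standard Poisson process fact that the expected spike count in an interval is the difference of the cumulative intensity at its endpoints. The closed-form `cum_intensity` is a hypothetical stand-in for a fitted model, and `expected_counts` is a helper named here for illustration; whether the paper derives its spike count statistics in exactly this way is an assumption.

```python
def cum_intensity(t, rate=5.0, ramp=3.0):
    """Hypothetical fitted cumulative intensity (stand-in for the network output)."""
    return rate * t + ramp * t ** 2

def expected_counts(bin_width, t_end=1.0):
    """Expected spike count per bin, read off as differences of the cumulative
    intensity at the bin edges. The bin width is chosen only at query time."""
    n_bins = round(t_end / bin_width)
    edges = [i * bin_width for i in range(n_bins + 1)]
    return [cum_intensity(b) - cum_intensity(a) for a, b in zip(edges, edges[1:])]

coarse = expected_counts(0.5)  # two bins
fine = expected_counts(0.1)    # ten bins

# Both binnings are derived from the same function, so their totals agree.
total_coarse = sum(coarse)
total_fine = sum(fine)
```

The model itself is never refit when the bin width changes; only the points at which the cumulative intensity is evaluated differ.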