To compensate for the lack of recent activity, here’s a link to a recent blog post from lab member Rich Pang, which provides a nice overview of different regularization methods, along with geometric intuition into how they work: Using regularization to handle correlated predictors

Author Archives: jpillow

Inferring synaptic plasticity rules from spike counts

In last week’s computational & theoretical neuroscience journal club I presented the following paper from Nicolas Brunel’s group:

Inferring learning rules from distributions of firing rates in cortical neurons.

Lim, McKee, Woloszyn, Amit, Freedman, Sheinberg, & Brunel.

Nature Neuroscience (2015).

The paper seeks to explain experience-dependent changes in IT cortical responses in terms of an underlying synaptic plasticity rule.

Attractors, chaos, and network dynamics for short term memory

In a recent lab meeting, I presented the following paper from Larry Abbott’s group:

From fixed points to chaos: Three models of delayed discrimination.

Barak, Sussillo, Romo, Tsodyks, & Abbott,

Progress in Neurobiology 103:214-22 (2013).

The paper seeks to connect the statistical properties of neurons in pre-frontal cortex (PFC) during short-term memory with those exhibited by several dynamical models for neural population responses. In a sense, it can be considered a follow-up to Christian Machens’ beautiful 2005 Science paper [2], which showed how a simple attractor model could support behavior in a two-interval discrimination task. The problem with the Machens/Brody/Romo account (which relied on mutual inhibition between two competing populations) is that it predicts extremely stereotyped response profiles, with all neurons in each population exhibiting the same profile.

Code released for “Binary Pursuit” spike sorting

At long last, I’ve finished cleaning, commenting, and packaging up code for binary pursuit spike sorting, introduced in our 2013 paper in PLoS ONE. You can download the Matlab code here (or on github), and there’s a simple test script to illustrate how to use it on a simulated dataset.

The method relies on a generative model (of the raw electrode data) that explicitly accounts for the superposition of spike waveforms. This allows it to detect synchronous and overlapping spikes in multi-electrode recordings, which clustering-based methods (by design) fail to do.
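To make the superposition idea concrete, here is a minimal sketch of that kind of generative model: each neuron contributes its waveform wherever it spikes, and the contributions simply add. The waveform shapes, the function name `simulate_trace`, and the single-electrode setup are illustrative assumptions, not the released binary pursuit code.

```python
import numpy as np

def simulate_trace(waveforms, spike_trains, noise_sd=0.0, seed=0):
    """Electrode trace = sum over neurons of (binary spike train * waveform) + noise.

    waveforms    : list of 1D arrays, one (hypothetical) waveform per neuron
    spike_trains : array (n_neurons, n_samples) of 0/1 spike indicators
    """
    rng = np.random.default_rng(seed)
    n_samples = spike_trains.shape[1]
    trace = np.zeros(n_samples)
    for w, s in zip(waveforms, spike_trains):
        # Convolution stamps a copy of the waveform at every spike time.
        trace += np.convolve(s, w)[:n_samples]
    return trace + noise_sd * rng.standard_normal(n_samples)

# Two made-up waveforms and two spikes that overlap in time.
waveforms = [np.array([0.0, -1.0, 0.5, 0.2]), np.array([0.0, -0.4, -0.8, 0.3])]
spikes = np.zeros((2, 30))
spikes[0, 10] = 1
spikes[1, 11] = 1

both = simulate_trace(waveforms, spikes)
only_a = simulate_trace(waveforms, spikes * np.array([[1], [0]]))
only_b = simulate_trace(waveforms, spikes * np.array([[0], [1]]))
```

In the noise-free case `both` equals `only_a + only_b`, so the overlapped event looks like neither waveform on its own. That is why a method that matches each event to a single cluster tends to miss one or both spikes.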

If you’d like to know more (but don’t feel like reading the paper), I wrote a blog post describing the basic intuition (and the cross-correlation artifacts that inspired us to develop it in the first place) back when the paper came out (link).

Subunit models for characterizing responses of sensory neurons

On July 28th, I presented the following paper in lab meeting:

- Efficient and direct estimation of a neural subunit model for sensory coding,

Vintch, Zaharia, Movshon, & Simoncelli, NIPS 2012

This paper proposes a new method for characterizing the multi-dimensional stimulus selectivity of sensory neurons. The main idea is that, instead of thinking of neurons as projecting high-dimensional stimuli into an arbitrary low-dimensional feature space (the view underlying characterization methods like STA, STC, iSTAC, GQM, MID, and all their rosy-cheeked cousins), it might be more useful / parsimonious to think of neurons as performing a projection onto convolutional subunits. That is, rather than characterizing stimulus selectivity in terms of a bank of arbitrary linear filters, it might be better to consider a subspace defined by translated copies of a single linear filter.
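A rough sketch of what "translated copies of a single linear filter" means in practice is given below. The squaring nonlinearity, the pooling weights, and the function names are assumptions chosen for illustration; the paper's actual model and fitting procedure are not reproduced here.

```python
import numpy as np

def shifted_filter_bank(k, n_stim):
    """Stack every translate of filter k that fits inside a length-n_stim stimulus."""
    n_shifts = n_stim - len(k) + 1
    bank = np.zeros((n_shifts, n_stim))
    for i in range(n_shifts):
        bank[i, i:i + len(k)] = k
    return bank

def subunit_response(x, k, weights, f=np.square):
    """Pool nonlinearly transformed subunit outputs (f and weights are assumptions)."""
    # Sliding the single filter over the stimulus gives all subunit outputs at once.
    drive = np.correlate(x, k, mode="valid")
    return float(weights @ f(drive))

rng = np.random.default_rng(0)
x = rng.standard_normal(20)          # one 1D stimulus frame
k = rng.standard_normal(5)           # the single subunit filter
weights = np.ones(16) / 16           # uniform pooling over the 16 shifts

bank = shifted_filter_bank(k, len(x))
r = subunit_response(x, k, weights)
```

Here `bank @ x` and `np.correlate(x, k, mode="valid")` give the same subunit outputs, so the 16-dimensional feature space is parameterized by just the 5 numbers in `k` rather than by 16 independent filters.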

Computational Vision Course 2014 @ CSHL

Yesterday marked the start of the 2014 summer course in COMPUTATIONAL NEUROSCIENCE: VISION at Cold Spring Harbor. The course was founded in 1985 by Tony Movshon and Ellen Hildreth, with the goal of inspiring new generations of students to address problems at the intersection of vision, computation, and the brain. The list of past attendees is impressive.

new paper: detecting overlapped spikes

I’m proud to announce the publication of our “zombie” spike sorting paper (Pillow, Shlens, Chichilnisky & Simoncelli 2013), which addresses the problem of detecting overlapped spikes in multi-electrode recordings.

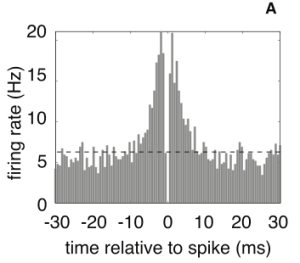

The basic problem we tried to address is that standard “clustering” based spike-sorting methods often miss near-synchronous spikes. As a result, you get cross-correlograms that look like this:

When I first saw these correlograms (back in 2005 or so), I thought: “Wow, amazing: retinal ganglion cells inhibit each other with 1-millisecond precision! Should we send this to Nature or Science?” My more sober experimental colleagues pointed out that this was likely only a (lowly) spike sorting artifact. So we set out to address the problem (leading to the publication of this paper a mere 8 years later!)

Sensory Coding Workshop @ MBI

This week, Memming and I are in Columbus, Ohio for a workshop on “Sensory and Coding”, organized by Brent Doiron, Adrienne Fairhall, David Kleinfeld, and John Rinzel.

Monday was “Big Picture Day”, and I gave a talk about Bayesian Efficient Coding, which represents our attempt to put Barlow’s Efficient Coding Hypothesis in a Bayesian framework, with an explicit loss function to specify what kinds of posteriors are “good”. One of my take-home bullet points was that “you can’t get around the problem of specifying a loss function”, and entropy is no less arbitrary than any other choice. This has led to some stimulating lunchtime discussions with Elad Schneidman, Surya Ganguli, Stephanie Palmer, David Schwab, and Memming over whether entropy really is special (or not!).

It’s been a great workshop so far, with exciting talks from a panoply of heavy hitters, including Garrett Stanley, Steve Baccus, Fabrizio Gabbiani, Tanya Sharpee, Nathan Kutz, Adam Kohn, and Anitha Pasupathy. You can see the full lineup here:

http://mbi.osu.edu/2012/ws6schedule.html

Cosyne 2013

We’ve recently returned from Utah, where several of us attended the 10th annual Computational and Systems Neuroscience (CoSyNe) meeting. It’s hard to believe Cosyne is ten! I got to have a little fun with the opening-night remarks, noting that Facebook and Cosyne were founded only a month apart in Feb/March 2004, with impressive aggregate growth in the years since:

The meeting kicked off with a talk from Bill Bialek (one of the invited speakers for the very first Cosyne—where he gave a chalk talk!), who provoked the audience with a talk entitled “Are we asking the right questions.” His answer (“no”) focused in part on the issue of what the brain is optimized for: in his view, for extracting information that is useful for predicting the future.

In honor of the meeting’s 10th anniversary, three additional reflective/provocative talks on the state of the field were contributed by Eve Marder, Terry Sejnowski, and Tony Movshon. Eve spoke about how homeostatic mechanisms lead to “degenerate” (non-identifiable) biophysical models and confer robustness in neural systems. Terry talked about the brain’s sensitivity to “suspicious coincidences” of spike patterns and the recent BAM proposal (which he played a central part in advancing). Tony gave the meeting’s final talk, a lusty defense of primate neurophysiology against the advancing hordes of rodent and invertebrate neuroscience, arguing that we will only understand the human brain by studying animals with sufficiently similar brains.

See Memming’s blog post for a summary of some of the week’s other highlights. We had a good showing this year, with 7 lab-related posters in total:

- I-4. Semi-parametric Bayesian entropy estimation for binary spike trains. Evan Archer, Il M Park, & Jonathan W Pillow. [oops—we realized after submitting that the estimator is not *actually* semi-parametric; live and learn.]

- I-14. Precise characterization of multiple LIP neurons in relation to stimulus and behavior. Jacob Yates, Il M Park, Lawrence Cormack, Jonathan W Pillow, & Alexander Huk.

- I-28. Beyond Barlow: a Bayesian theory of efficient neural coding. Jonathan W Pillow & Il M Park.

- II-6. Adaptive estimation of firing rate maps under super-Poisson variability. Mijung Park, J. Patrick Weller, Gregory Horwitz, & Jonathan W Pillow.

- II-14. Perceptual decisions are limited primarily by variability in early sensory cortex. Charles Michelson, Jonathan W Pillow, & Eyal Seidemann

- II-94. Got a moment or two? Neural models and linear dimensionality reduction. Il M Park, Evan Archer, Nicholas Priebe, & Jonathan W Pillow

- II-95. Spike train entropy-rate estimation using hierarchical Dirichlet process priors. Karin Knudson & Jonathan W Pillow.

Lab Meeting 2/11/2013: Spectral Learning

Last week I gave a brief introduction to Spectral Learning. This is a topic I’ve been wanting to know more about since reading the (very cool) NIPS paper from Lars Buesing, Jakob Macke and Maneesh Sahani, which describes a spectral method for fitting latent variable models of multi-neuron spiking activity (Buesing et al 2012). I presented some of the slides (and a few video demos) from the spectral learning tutorial organized by Geoff Gordon, Byron Boots, and Le Song at AISTATS 2012.

So, what is spectral learning, and what is it good for? The basic idea is that we can fit latent variable models very cheaply using just the eigenvectors of a covariance matrix, computed directly from the observed data. This stands in contrast to “standard” maximum likelihood methods (e.g., EM or gradient ascent), which require expensive iterative computations and are plagued by local optima.
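As a toy illustration of the "eigenvectors instead of iterations" idea, the sketch below fits probabilistic PCA, a linear-Gaussian latent variable model whose maximum likelihood solution happens to be available in closed form from the eigendecomposition of the sample covariance. This is a deliberately simple stand-in, not the spectral method of Buesing et al.; the function name and simulation settings are assumptions.

```python
import numpy as np

def spectral_ppca(X, k):
    """Closed-form probabilistic PCA fit from the sample covariance.

    X : array (n_samples, n_dims); k : number of latent dimensions.
    Returns a loading matrix (n_dims, k) and the isotropic noise variance.
    """
    C = np.cov(X, rowvar=False)
    evals, evecs = np.linalg.eigh(C)             # eigh returns ascending order
    evals, evecs = evals[::-1], evecs[:, ::-1]   # sort descending
    sigma2 = evals[k:].mean()                    # noise = mean discarded eigenvalue
    W = evecs[:, :k] * np.sqrt(np.maximum(evals[:k] - sigma2, 0.0))
    return W, sigma2

# Simulate x = W z + noise with a 2D latent and 10 observed dimensions.
rng = np.random.default_rng(0)
n, d, k = 5000, 10, 2
W_true = rng.standard_normal((d, k))
Z = rng.standard_normal((n, k))
X = Z @ W_true.T + 0.5 * rng.standard_normal((n, d))

W_hat, sigma2_hat = spectral_ppca(X, k)
```

The loadings are only identified up to a rotation of the latent space, so the sensible comparison is between the column spaces of `W_hat` and `W_true`, not the matrices themselves. There is no iteration and no initialization to worry about, which is the appeal.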

Year in Review: 2012

We’re now almost a month into 2013, but I wanted to post a brief reflection on our lab highlights from 2012.

Ideas / Projects:

Here’s a summary of a few of the things we’ve worked on:

• Active Learning – Basically: these are methods for adaptive, “closed-loop” stimulus selection, designed to improve neurophysiology experiments by selecting stimuli that tell you the most about whatever it is you’re interested in (so you don’t waste time showing stimuli that don’t reveal anything useful). Mijung Park has made progress on two distinct active learning projects. The first focuses on estimating linear receptive fields in a GLM framework (published in NIPS 2012): the main advance is a particle-filtering based method for doing active learning under a hierarchical receptive field model, applied specifically with the “ALD” prior that incorporates localized receptive field structure (an extension of her 2011 PLoS CB paper). The results are pretty impressive (if I do say so!), showing substantial improvements over Lewi, Butera & Paninski’s work (which used a much more sophisticated likelihood). Ultimately, there’s hope that a combination of the Lewi et al likelihood with our prior could yield even bigger advances.

The second active learning project involves a collaboration with Greg Horwitz’s group at U. Washington, aimed at estimating the nonlinear color tuning properties of neurons in V1. Here, the goal is to estimate an arbitrary nonlinear mapping from input space (the 3D space of cone contrasts, for Greg’s data) to spike rate, using a Gaussian Process prior over the space of (nonlinearly transformed) tuning curves. This extends Mijung’s 2011 NIPS paper to examine the role of the “learning criterion” and link function in active learning paradigms (submitted to AISTATS) and to incorporate response history and overdispersion (which occurs when spike count variance > spike count mean) (new work to be presented at Cosyne 2013). We’re excited that Greg and his student Patrick Weller have started collecting some data using the new method, and plan to compare it to conventional staircase methods.

• Generalized Quadratic Models – The GQM is an extension of the GLM encoding model to incorporate a low-dimensional quadratic form (as opposed to pure linear form) in the first stage. This work descends directly from our 2011 NIPS paper on Bayesian Spike-Triggered Covariance, with application to both spiking (Poisson) and analog (Gaussian noise) responses. One appealing feature of this setup is the ability to connect maximum likelihood estimators with moment-based estimators (response-triggered average and covariance) via a trick we call the “expected log-likelihood”, an idea on which Alex Ramirez and Liam Paninski have also done some very elegant theoretical work.

Basically, what’s cool about the GQM framework is that it combines a lot of desirable things: (1) ability to estimate a neuron’s (multi-dimensional, nonlinear) stimulus dependence very quickly when stimulus distribution is “nice” (like STC and iSTAC); (2) achieve efficient performance when stimulus distribution isn’t “nice” (like MID / maximum-likelihood); (3) incorporate spike history (like GLM), but with quadratic terms (making it more flexible than GLM, and unconditionally stable); (4) apply to both spiking and analog data. The work clarifies theoretical relationships between moment-based and likelihood-based formulations (novel, as far as we know, for the analog / Gaussian noise version). This is joint work with Memming, Evan & Nicholas Priebe. (Jonathan gave a talk at SFN; new results to be presented at Cosyne 2013).

• Modeling decision-making signals in parietal cortex (LIP) – encoding and decoding analyses of the information content of spike trains in LIP, using a generalized linear model (with Memming and Alex Huk; presented in a talk by Memming at SFN 2012). Additional work by Kenneth and Jacob on Bayesian inference for “switching” and “diffusion to bound” latent variable models for LIP spike trains, using MCMC and particle filtering (also presented at SFN 2012).

• Non-parametric Bayesian models for spike trains / entropy estimation – Evan Archer, Memming Park and I have worked on extending the popular “Nemenman-Shafee-Bialek” (NSB) entropy estimator to countably-infinite distributions (i.e., cases where one doesn’t know the true number of symbols). We constructed novel priors using mixtures of Dirichlet Processes and Pitman-Yor Processes, arriving at what we call a Pitman-Yor Mixture (PYM) prior; the resulting Bayes least-squares entropy estimator is explicitly designed to handle data with power-law tails (first version published in NIPS 2012). We have some new work in this vein coming up at Cosyne, with Evan & Memming presenting a poster that models multi-neuron spike data with a Dirichlet process centered on a Bernoulli model (i.e., using a Bernoulli model for the base distribution of the DP). Karin Knudson will present a poster about using the hierarchical Dirichlet process (HDP) to capture the Markovian structure in spike trains and estimate entropy rates.

• Bayesian Efficient Coding – new normative paradigm for neural coding, extending Barlow’s efficient coding hypothesis to a Bayesian framework. (Joint work with Memming: to appear at Cosyne 2013).

• Coding with the Dichotomized Gaussian model – Ozan Koyluoglu has been working to understand the representational capacity of the DG model, which provides an attractive alternative to the Ising model for describing the joint dependencies in multi-neuron spike trains. The model is known in the statistics literature as the “multivariate probit”, and it seems there should be good opportunities for cross-pollination here.

• Other ongoing projects include spike-sorting (with Jon Shlens, EJ Chichilnisky, & Eero Simoncelli), prior elicitation in Bayesian ideal observer models (with Ben Naecker), model-based extensions of the MID estimator for neural receptive fields (with Ross Williamson and Maneesh Sahani), Bayesian models for biases in 3D motion perception (with Bas Rokers), models of joint choice-related and stimulus-related variability in V1 (with Chuck Michelson & Eyal Seidemann; to be presented at Cosyne 2013), new models for psychophysical reverse correlation (with Jacob Yates), and Bayesian inference methods for regression and factor analysis in neural models with negative binomial spiking (with James Scott, published in NIPS 2012).

Conferences: We presented our work this year at: Cosyne (Feb: Salt Lake City & Snowbird), CNS workshops (July: Atlanta), SFN (Oct: New Orleans), NIPS (Dec: Lake Tahoe).

Reading Highlights:

- In the fall, we continued (re-started) our reading group on Non-parametric Bayesian models, focusing on models of discrete data based on the Dirichlet Process, in particular Hierarchical Dirichlet Processes (Teh et al) and the Sequence Memoizer (Wood et al).

- Kenneth has introduced us to Riemannian Manifold HMC and Hybrid Monte Carlo and some other fancy Bayesian inference methods, and is preparing to tell us about some implementations (using CUDA) that allow them to run super fast on the GPU (if you have an nvidia graphics card).

- We enjoyed reading Simon Wood’s paper (Nature 2010), about “simulated likelihood methods” for doing statistical inference in systems with chaotic dynamics. Pretty cool idea, related to the Method of Simulated Moments, that he applies to some crazy chaotic (but simple) nonlinear models from population ecology. Seems like an approach that might be useful for neuroscience applications (where we also have biophysical models described by nonlinear ODEs for which inference is difficult!)

Milestones:

- Karin Knudson: new lab member (Ph.D. student in mathematics), joined during fall semester.

- Kenneth Latimer & Jacob Yates: passed INS qualifying exams

- Mijung Park: passed Ph.D. qualifying exam in ECE.

NP Bayes reading group (9/27): hierarchical DPs

Our second NPB reading group meeting took aim at the seminal 2006 paper (with >1000 citations!) by Teh, Jordan, Beal & Blei on Hierarchical Dirichlet Processes. We were joined by newcomers Piyush Rai (newly arrived SSC postdoc), and Ph.D. students Dan Garrette (CS) and Liang Sun (mathematics), both of whom have experience with natural language models.

We established a few basic properties of the hierarchical DP, such as the fact that it involves creating dependencies between DPs by endowing them with a common base measure, which is itself sampled from a DP. That is:

$G_0 \sim \mathrm{DP}(\gamma, H)$ (“global measure” sampled from DP with base measure $H$ and concentration $\gamma$).

$G_j \mid G_0 \sim \mathrm{DP}(\alpha_0, G_0)$ (sequence of conditionally independent random measures with common base measure $G_0$, e.g., $G_j$ are distributions over clusters from data collected on different days)

Beyond this, we got bogged down in confusion over metaphors and interpretations, unclear whether ‘s were topics or documents or tables or restaurants or ethnicities, and were hampered by having two different versions of the manuscript floating around with different page numbers and figures.

This week: we’ll take up where we left off, focusing on Section 4 (“Hierarchical Dirichlet Processes”) with discussion led by Piyush. We’ll agree to show up with the same (“official journal”) version of the manuscript, available: here.

Time: 4:00 PM, Thursday, Oct 4.

Location: SEA 5.106

Please email pillow AT mail.utexas.edu if you’d like to be added to the announcement list.

Revivifying the NP Bayes Reading Group

After a nearly 1-year hiatus, we’ve restarted our reading group on non-parametric (NP) Bayesian methods, focused on models for discrete data based on generalizations of the Dirichlet and other stick-breaking processes.

Thursday (9/20) was our first meeting, and Karin led a discussion of:

Teh, Y. W. (2006). A hierarchical Bayesian language model based on Pitman-Yor processes. Proceedings of the 21st International Conference on Computational Linguistics and the 44th Annual Meeting of the Association for Computational Linguistics, 985-992.

In the first meeting, we made it only as far as describing the Pitman-Yor (PY) process, a stochastic process whose samples are random probability distributions, and two methods for sampling from it:

- Chinese Restaurant sampling (aka “Blackwell-MacQueen urn scheme”), which directly provides samples $\theta_1, \ldots, \theta_n$ from distribution $G$ with $G$ marginalized out.

- Stick-breaking, which samples the distribution $G$ explicitly, using independent draws of Beta random variables to obtain stick weights $\pi_1, \pi_2, \ldots$.
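Both sampling schemes are short enough to sketch in code. The version below uses the standard Pitman-Yor parameterization with discount `d` (between 0 and 1) and concentration `alpha` (taken to be positive here); the function names, the truncation of the stick-breaking construction to a finite number of sticks, and the parameter values are assumptions made for illustration.

```python
import numpy as np

def py_stick_breaking(d, alpha, n_sticks, rng):
    """Truncated stick-breaking weights for a Pitman-Yor process.

    V_k ~ Beta(1 - d, alpha + k*d) independently, k = 1, 2, ...
    pi_k = V_k * prod_{j<k} (1 - V_j)
    """
    k = np.arange(1, n_sticks + 1)
    v = rng.beta(1.0 - d, alpha + k * d)
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - v)[:-1]))
    return v * remaining

def py_chinese_restaurant(d, alpha, n_customers, rng):
    """Seat customers one at a time; returns table labels and table counts."""
    counts, seating = [], []
    for n in range(n_customers):
        # Existing table k: (count_k - d); new table: (alpha + d * n_tables).
        probs = np.array([c - d for c in counts] + [alpha + d * len(counts)])
        probs = probs / (n + alpha)
        table = rng.choice(len(probs), p=probs)
        if table == len(counts):
            counts.append(1)
        else:
            counts[table] += 1
        seating.append(int(table))
    return seating, counts

rng = np.random.default_rng(0)
weights = py_stick_breaking(d=0.5, alpha=1.0, n_sticks=1000, rng=rng)
seating, counts = py_chinese_restaurant(d=0.5, alpha=1.0, n_customers=500, rng=rng)
```

The restaurant sampler only produces a partition. To turn it into samples from the marginalized distribution, draw one value from the base measure per table and give it to every customer seated there. Setting `d = 0` recovers the ordinary Dirichlet process, in which case the Beta draws are also identically distributed.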

We briefly discussed the intuition for the hierarchical PY process, which uses a PY process as the base measure for PY process priors at deeper levels of the hierarchy (applied here to develop an n-gram model for natural language).

Next week: We’ve decided to go a bit further back in time to read:

Teh, Y. W.; Jordan, M. I.; Beal, M. J. & Blei, D. M. (2006). Hierarchical dirichlet processes. Journal of the American Statistical Association 101:1566-1581.

Time: Thursday (9/27), 4:00pm.

Location: Pillow lab

Presenter: Karin

note: if you’d like to be added to the email announcement list for this group, please send email to pillow AT mail.utexas.edu.

NIPS 2011 summary

See Memming’s post on NIPS 2011 highlights.

I’m still hoping to post my own list of highlights, but may have to wait until after the flurry of Cosyne-related review activity subsides.

Talking about our LIP modeling work at CUNY (11/29)

Tomorrow I’ll be speaking at a Symposium on Minds, Brains and Models at City University of New York, the third in a series organized by Bill Bialek. I will present some of our recent work on model-based approaches to understanding the neural code in parietal cortex (area LIP), which is joint work with Memming, Alex Huk, Miriam Meister, & Jacob Yates.

Encoding and decoding of decision-related information from spike trains in parietal cortex (12:00 PM)

Looks to be an exciting day, with talks from Sophie Deneve, Elad Schneidman & Gasper Tkacik.

new paper in PLoS Comp Bio

Our paper came out online today!

Receptive Field Inference with Localized Priors

M. Park & J.W. Pillow

PLoS Comput Biol 7(10). (2011).

Great to see it in print. Strangely, PLoS CB doesn’t send galley proofs, and they introduced a small error in one of the figs. Hopefully they’ll agree to fix it…

Lab Meeting 10/26/2011

This week, Ozan presented a recent paper from Matthias Bethge’s group:

A. S. Ecker, P. Berens, A. S. Tolias, and M. Bethge

The effect of noise correlations in populations of diversely tuned neurons

The Journal of Neuroscience, 2011

The paper describes an analysis of the effects of correlations on the coding properties of a neural population, analyzed using Fisher information. The setup is that of a 1D circular stimulus variable (e.g., orientation) encoded by a population of N neurons defined by a bank of tuning curves (specifying the mean of each neuron’s response), and a covariance matrix describing the correlation structure of additive “proportional” Gaussian noise.
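For reference, Fisher information has a standard closed form when the population response is Gaussian. Writing $f(\theta)$ for the vector of tuning curves and $Q(\theta)$ for the noise covariance (my notation here, which may differ from the paper's), and using primes for derivatives with respect to the stimulus $\theta$:

$$J(\theta) = f'(\theta)^\top Q(\theta)^{-1} f'(\theta) + \tfrac{1}{2}\,\mathrm{Tr}\!\left[ Q'(\theta)\, Q(\theta)^{-1}\, Q'(\theta)\, Q(\theta)^{-1} \right]$$

The first term depends on how the mean response changes with the stimulus, and the second on how the covariance changes. The second term vanishes when the noise covariance does not depend on the stimulus.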

The authors find that when the tuning curves are heterogeneous (i.e., not shifted copies of a single Gaussian bump), then noise correlations do not reduce Fisher information. So correlated noise is not necessarily harmful. This seems surprising in light of a bevy of recent papers showing that the primary neural correlate of perceptual improvement (due to learning, attention, etc.) is a reduction in noise correlations. (So Matthias, what is going on??).

It’s a very well written paper, very thorough, with gorgeous figures. And I think it sets a new record for “most equations in the main body of a J. Neuroscience paper”, at least as far as I’ve ever seen. Nice job, guys!

Lab Meeting, 10/12/11

This week we discussed a recent paper from Anne Churchland and colleagues:

Variance as a Signature of Neural Computations during Decision Making,

Anne K. Churchland, R. Kiani, R. Chaudhuri, Xiao-Jing Wang, Alexandre Pouget, & M.N. Shadlen. Neuron 69(4):818-831 (2011).

This paper examines the variance of spike counts in area LIP during the “random dots” decision-making task. While much has been made of (trial-averaged) spike rates in these neurons (specifically, the tendency to “ramp” linearly during decision-making), little has been made of their variability.

The paper’s central goal is to divide the net spike count variance (measured in 60 ms bins) into two fundamental components, in accordance with a doubly stochastic modulated renewal model of the response. We can formalize this as follows: let $X$ denote the external (“task”) variables on a single trial (motion stimulus, saccade direction, etc), let $\lambda$ denote the time-varying (“command”) spike rate on that trial, and let $N$ represent the actual (binned) spike counts. The model specifies the final distribution over spike counts $P(N|X)$ in terms of two underlying distributions (hence “doubly stochastic”):

- $P(\lambda|X)$: the distribution over rate given the task variables. This is the primary object of interest; $\lambda$ is the “desired” rate that the neuron uses to encode the animal’s decision on a particular trial.

- $P(N|\lambda)$: the distribution over spike counts given a particular spike rate. This distribution represents “pure noise” reflecting the Poisson-like spiking variability in spike time arrivals, and is to be disregarded / averaged out for purposes of the quantities computed by LIP neurons.

So we can think of this as a kind of “cascade” model: $X \rightarrow \lambda \rightarrow N$, where each of those arrows implies some kind of noisy encoding process.

The law of total variance states essentially that the total variance of the spike count $N$ is the sum of the “rate” variance of $\lambda$ and the average point-process variance $\mathrm{var}(N|\lambda)$, averaged across $\lambda$:

$$\mathrm{var}(N) = \mathrm{var}\big(\mathrm{E}[N|\lambda]\big) + \mathrm{E}\big[\mathrm{var}(N|\lambda)\big]$$

Technically, the first of these (the quantity of interest here) is called the “variance of the conditional expectation” (or varCE, as it says on the t-shirt); this terminology comes from the fact that $\lambda$ is the conditional expectation of $N$, and we’re interested in its variability, or $\mathrm{var}(\mathrm{E}[N|\lambda])$. The approach taken here is to assume that the spiking process $N|\lambda$ is governed by a (modulated) renewal process, meaning that there is a linear relationship between $\lambda$ and the variance of $N|\lambda$. That is, $\mathrm{var}(N|\lambda) = \phi\lambda$. For a Poisson process, we would have $\phi = 1$, since variance is equal to mean.

The authors’ approach to data analysis in this paper is as follows:

- Estimate $\phi$ from data, identifying it with the smallest Fano factor observed in the data. (This assumes that the varCE is zero at this point, so the observed variability is only due to renewal spiking, and ensures varCE is never negative.)

- Estimate the varCE as $\mathrm{var}(N) - \phi\,\mathrm{E}[N]$ in each time bin.
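The two steps above can be sketched on simulated data where the true rate variance is known. Everything specific here is an assumption for illustration: the function names, Poisson spiking (so the true ratio is 1), rates expressed as expected spikes per bin, and the particular rate variances in each bin. It is not the authors' analysis code.

```python
import numpy as np

def estimate_phi(counts):
    """Smallest Fano factor (variance / mean) across bins; counts is trials x bins."""
    fano = counts.var(axis=0, ddof=1) / counts.mean(axis=0)
    return fano.min()

def var_ce(counts, phi):
    """Total count variance minus the assumed point-process variance, per bin."""
    return counts.var(axis=0, ddof=1) - phi * counts.mean(axis=0)

rng = np.random.default_rng(1)
n_trials = 20000
rate_sd = np.array([0.0, 1.0, 2.0, 3.0])     # true sd of the rate in each bin
rates = 20.0 + rate_sd * rng.standard_normal((n_trials, rate_sd.size))
rates = np.maximum(rates, 0.0)               # rates cannot be negative
counts = rng.poisson(rates)                  # Poisson spiking given the rate

phi_hat = estimate_phi(counts)
var_ce_hat = var_ce(counts, phi_hat)
```

With this many trials `var_ce_hat` should land close to `rate_sd**2`. Note that the bin with the smallest Fano factor gets a varCE of exactly zero by construction, which is the built-in assumption flagged in the first step.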

The take-home conclusion is that the variance of $\lambda$ (i.e., the varCE) is consistent with $\lambda$ evolving according to a drift-diffusion model (DDM): it grows linearly with time, which is precisely the prediction of the DDM (aka “diffusion to bound” or “bounded accumulator” model, equivalent to a Wiener process plus linear drift). This rules out several competing models of LIP responses (e.g., a time-dependent scaling of i.i.d. Gaussian response noise), but is roughly consistent with both the population coding framework of Pouget et al (‘PPC’) and a line attractor model from XJ Wang. (This sheds some light on the otherwise miraculous confluence of authors on this paper, for which Anne surely deserves high diplomatic honors).
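The linear growth of variance is easy to check numerically for a Wiener process with drift. The sketch below is a bare-bones simulation with made-up parameter values, and it ignores the absorbing bound, so it only illustrates the unbounded-diffusion prediction.

```python
import numpy as np

def simulate_ddm(n_trials, n_steps, dt, drift, sigma, rng):
    """Euler simulation of dx = drift*dt + sigma*dW, with no bound."""
    steps = drift * dt + sigma * np.sqrt(dt) * rng.standard_normal((n_trials, n_steps))
    return np.cumsum(steps, axis=1)

rng = np.random.default_rng(2)
dt, sigma = 0.01, 1.5
paths = simulate_ddm(n_trials=20000, n_steps=100, dt=dt, drift=0.8, sigma=sigma, rng=rng)

t = dt * np.arange(1, 101)
var_t = paths.var(axis=0, ddof=1)     # across-trial variance at each time point
slope = np.polyfit(t, var_t, 1)[0]    # should be close to sigma**2
```

The drift moves the mean but contributes nothing to the across-trial variance, so the fitted slope estimates `sigma**2` alone.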

Comments:

- The assumption of renewal process variability (variance proportional to rate with fixed ratio $\phi$) seems somewhat questionable for real neurons. For a Poisson neuron with absolute refractory period, or a noisy integrate-and-fire neuron, the variance in $N$ will be an upside-down U-shaped function of spike rate: variance will increase with rate up to a point, but will then go down again to zero as the spike rate bumps up against the refractory period. This would substantially affect the estimates of varCE at high spike rates (making it higher than reported here). This doesn’t seem likely to threaten any of the paper’s basic conclusions here, but it seems a bit worrying to prescribe as a general method.

- Memming pointed out that you could explicitly fit the doubly-stochastic model to data, which would get around making this “renewal process” assumption and provide a much more powerful descriptive model for analyzing the code in LIP. In other words: from the raw spike train data, directly estimate parameters governing the distributions $P(\lambda|X)$ and $P(N|\lambda)$. The resulting model would explicitly specify the stochastic spiking process given $\lambda$, as well as the distribution over rates $\lambda$ given $X$. Berry & Meister used a version of this in their 1998 JN paper, referred to as a “free firing rate model”: assume that the stimulus gives rise to some time-varying spike rate, and that the spikes are then governed by a point process (e.g. renewal, Poisson with refractory period, etc) with that given rate. This would allow you to look at much more than just variance (i.e., you have access to any higher moments you want, or other statistics like ISI distributions), and do explicit model comparison.

- General philosophical point: the doubly stochastic formulation makes for a nice statistical model, but it’s not entirely clear to me how to interpret the two kinds of stochasticity. Specifically, is the variability in $\lambda$ due to external noise in the moving dots stimulus itself (which contains slightly different dots on each trial), or noise in the responses of earlier sensory neurons that project to LIP? If it’s the former, then $\lambda$ should be identical across repeats with the same noise dots. If the latter, then it seems we don’t want to think of $\lambda$ as pure signal: it reflects point process noise from neurons earlier in the system, so it’s not clear what we gain by distinguishing the two kinds of noise. (Note that the standard PPC model is not doubly stochastic in this sense: the only noise between $X$, the external quantity of interest, and $N$, the spike count, is the vaunted “exponential family with linear sufficient statistics” noise; PPC tells how to read out the spikes to get a posterior distribution over $X$, not over $\lambda$).

To sum up, the paper shows a nice analysis of spike count variance in LIP responses, and a cute application of the law of total variance. The doubly stochastic point process model of LIP responses seems ripe for more analysis.

Methods in Computational Neuroscience @ Woods Hole

Next week, Evan Archer and I are off to Woods Hole, MA for the Methods in Computational Neuroscience (MCN 2011) course, organized by Adrienne Fairhall (U. Washington) and Michael Berry (Princeton).

You can get a sense of what we’ll be up to from the provisional schedule (schedule.pdf). I lecture during the first week, which will focus on “neural coding”. After that, I head back to the simmering Texas Cauldron, but Evan gets to stay for the whole month—lucky Evan! Be careful not to O.D. on bioluminescence…

Memming to speak at Joint Statistical Mtg (Aug 1, 11)

If you happen to be in Miami Beach, FL and have had enough of sun, sand and Art Deco, come hear Memming speak about “Spike Train Kernel Methods for Neuroscience” at the JSM 2011, in a Monday session on Statistical Modeling of Neural Spike, organized by Dong Song (USC) and Haonan Wang (Colorado State).

Memming will speak about kernel-based methods for clustering, decoding, and computing distances between spike trains, which he started during his Ph.D. at U. Florida with José Príncipe.